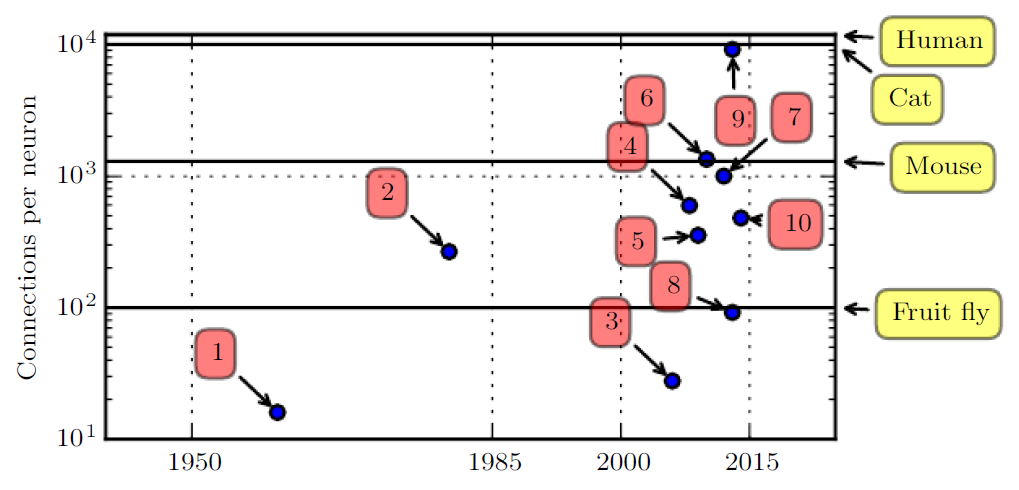

Initially, the number of connections between neurons in artificial neural networks was limited by hardware capabilities.

Today, the number of connections between neurons is mostly a design consideration.

Some artificial neural networks have nearly as many connections per neuron as a cat, and it is quite common for other neural networks to have as many connections per neuron as smaller mammals like mice.

Even the human brain does not have an exorbitant number of connections per neuron.

Biological neural network sizes are from Wikipedia (2015).

Artificial neural networks referenced in the figure:

- Adaptive linear element (Widrow and Hoff, 1960)

- Neocognitron (Fukushima, 1980)

- GPU-accelerated convolutional network (Chellapilla et al., 2006)

- Deep Boltzmann machine (Salakhutdinov and Hinton, 2009a)

- Unsupervised convolutional network (Jarrett et al., 2009)

- GPU-accelerated multilayer perceptron (Ciresan et al., 2010)

- Distributed autoencoder (Le et al., 2012)

- Multi-GPU convolutional network (Krizhevsky et al., 2012)

- COTS HPC unsupervised convolutional network (Coates et al., 2013)

- GoogLeNet (Szegedy et al., 2014a)