Since the 1980s, deep learning has consistently improved in its ability to provide accurate recognition or prediction.

Moreover, deep learning has consistently been applied with success to broader and broader sets of applications.

Image recognition

The earliest deep models were used to recognize individual objects in tightly cropped, extremely small images (Rumelhart et al., 1986a).

Since then there has been a gradual increase in the size of images neural networks could process.

Modern object recognition networks process rich high-resolution photographs and do not require that the photo be cropped near the object to be recognized (Krizhevsky et al., 2012).

Similarly, the earliest networks could only recognize two kinds of objects (or in some cases, the absence or presence of a single kind of object), while these modern networks typically recognize at least 1,000 different categories of objects.

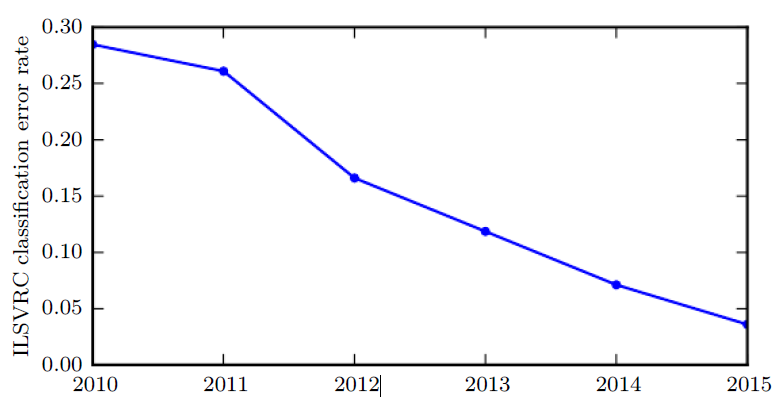

The largest contest in object recognition is the ImageNet Large Scale Visual Recognition Challenge (ILSVRC), held each year.

A dramatic moment in the meteoric rise of deep learning came when a convolutional network won this challenge for the first time and by a wide margin, bringing down the state-of-the-art top-5 error rate from 26.1% to 15.3% (Krizhevsky et al., 2012).

The top-5 error rate is measured as follows: the convolutional network produces a ranked list of possible categories for each image, and the correct category appeared in the first five entries of this list for all but 15.3% of the test examples.
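The top-5 error rate described above can be made concrete with a minimal sketch. The function name `top_k_error` and the toy scores and labels are illustrative assumptions, not part of the ILSVRC tooling or of any cited system. The sketch ranks the categories for each example by score and counts how often the correct category is missing from the first k entries.

```python
from typing import Sequence


def top_k_error(
    scores: Sequence[Sequence[float]],
    labels: Sequence[int],
    k: int = 5,
) -> float:
    """Fraction of examples whose true label is not among the k highest-scoring categories."""
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    if not labels:
        raise ValueError("need at least one example")

    misses = 0
    for row, label in zip(scores, labels):
        # Rank category indices from highest to lowest score.
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        # The example counts as an error if the true label is outside the top k.
        if label not in ranked[:k]:
            misses += 1
    return misses / len(labels)


# Hypothetical toy data: 3 examples, 6 categories each.
scores = [
    [0.10, 0.50, 0.20, 0.05, 0.10, 0.05],  # true label 1 has the highest score
    [0.30, 0.25, 0.20, 0.15, 0.07, 0.03],  # true label 5 has the lowest score
    [0.05, 0.10, 0.15, 0.22, 0.18, 0.30],  # true label 3 has the second-highest score
]
labels = [1, 5, 3]

top5 = top_k_error(scores, labels, k=5)
top1 = top_k_error(scores, labels, k=1)
print(f"top-5 error: {top5:.3f}, top-1 error: {top1:.3f}")
```

On this toy data only the second example misses the top five, so the top-5 error is one in three, while the stricter top-1 error is two in three. A real evaluation would use 1,000 categories and the full test set, but the counting is the same.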

Since then, these competitions have been consistently won by deep convolutional nets, and as of this writing, advances in deep learning have brought the latest top-5 error rate in this contest down to 3.6%, as shown in the accompanying figure.

Speech recognition

Deep learning has also had a dramatic impact on speech recognition.

After improving throughout the 1990s, the error rates for speech recognition stagnated starting in about 2000.

The introduction of deep learning (Dahl et al., 2010; Deng et al., 2010b; Seide et al., 2011; Hinton et al., 2012a) to speech recognition resulted in a sudden drop of error rates, with some error rates cut in half.

Other fields

Deep networks have also had spectacular successes for pedestrian detection and image segmentation (Sermanet et al., 2013; Farabet et al., 2013; Couprie et al., 2013) and yielded superhuman performance in traffic sign classification (Ciresan et al., 2012).

Increasing complexity

At the same time that the scale and accuracy of deep networks have increased, so has the complexity of the tasks that they can solve.

Goodfellow et al. (2014) showed that neural networks could learn to output an entire sequence of characters transcribed from an image, rather than just identifying a single object.

Previously, it was widely believed that this kind of learning required labeling of the individual elements of the sequence (Gülçehre and Bengio, 2013).

Recurrent neural networks, such as the LSTM sequence model mentioned above, are now used to model relationships between sequences and other sequences rather than just fixed inputs.

This sequence-to-sequence learning seems to be on the cusp of revolutionizing another application: machine translation (Sutskever et al., 2014; Bahdanau et al., 2015).

Neural Turing machines

Reinforcement learning

Another crowning achievement of deep learning is its extension to the domain of reinforcement learning.

In the context of reinforcement learning, an autonomous agent must learn to perform a task by trial and error, without any guidance from the human operator.

DeepMind demonstrated that a reinforcement learning system based on deep learning is capable of learning to play Atari video games, reaching human-level performance on many tasks (Mnih et al., 2015).

Deep learning has also significantly improved the performance of reinforcement learning for robotics (Finn et al., 2015).

Profitability

Many of these applications of deep learning are highly profitable.

Deep learning is now used by many top technology companies including Google, Microsoft, Facebook, IBM, Baidu, Apple, Adobe, Netflix, NVIDIA and NEC.

Software infrastructure

Advances in deep learning have also depended heavily on advances in software infrastructure.

Software libraries such as Theano (Bergstra et al., 2010; Bastien et al., 2012), PyLearn2 (Goodfellow et al., 2013c), Torch (Collobert et al., 2011b), DistBelief (Dean et al., 2012), Caffe (Jia, 2013), MXNet (Chen et al., 2015), and TensorFlow (Abadi et al., 2015) have all supported important research projects or commercial products.

Contributions to other sciences

Deep learning has also made contributions back to other sciences.

Modern convolutional networks for object recognition provide a model of visual processing that neuroscientists can study (DiCarlo, 2013).

Deep learning also provides useful tools for processing massive amounts of data and making useful predictions in scientific fields.

It has been successfully used:

- to predict how molecules will interact in order to help pharmaceutical companies design new drugs (Dahl et al., 2014),

- to search for subatomic particles (Baldi et al., 2014),

- to automatically parse microscope images used to construct a 3-D map of the human brain (Knowles-Barley et al., 2014).

We expect deep learning to appear in more and more scientific fields in the future.

Summary

In summary, deep learning is an approach to machine learning that has drawn heavily on our knowledge of the human brain, statistics and applied math as it developed over the past several decades.

In recent years, it has seen tremendous growth in its popularity and usefulness, due in large part to more powerful computers, larger datasets and techniques to train deeper networks.

The years ahead are full of challenges and opportunities to improve deep learning even further and bring it to new frontiers.